The tale of the "O2 Fail" (starring the iPhone 3GS)

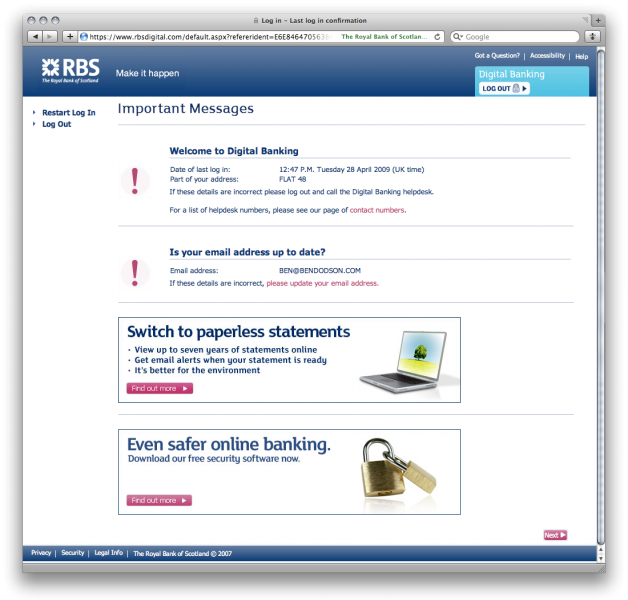

Ever since the iPhone 3GS was announced in the Apple WWDC Keynote last week, the internet has been ablaze with criticism of both AT&T and O2 with regard to their upgrade policies for existing iPhone 3G customers. I have battled long and hard on Twitter and on the O2 Forums to try to put across that they have in fact done nothing wrong and that it is the public that is misguided, yet this has fallen on relatively deaf ears. I decided it would be easier to put one whole post together about the "O2 Fail" (or #o2fail for you Tweeters) and the rational response to it so I could just point people in this direction rather than re-explaining myself over and over!

Disclaimer: I don't work for O2 and never have done. I used to be on the 3 mobile network in the UK as it was the cheapest before switching to O2 in order to get the iPhone. I bought the original iPhone about 4 months before the 3G came out (and so paid full price for it which was around £330 plus a £35 contract) and then upgraded to the iPhone 3G by paying £59 and upgrading to the £45 18-month contract. I will be buying the iPhone 3GS next Friday by taking out a second contract and letting the remaining 6 months on my current contract run out. Therefore, I have no reason to be supporting O2 as I would benefit greatly if they caved in and let people upgrade for free - the point I'm putting across is that there is good reason why they aren't doing that and people need to understand why that is.

The iPhone 3GS

During the WWDC Keynote, Apple announced that the new model of the iPhone (the iPhone 3GS - the 'S' stands for 'Speed') would be released on the 19th of June in 6 countries at a price of $199 for the 16GB model and $299 for the 32GB model. Crucially, these were prices on a specific AT&T 24-month tariff for new customers. Here in the UK, the prices vary from 'free' to £280 or so (or even £550 if you choose Pay As You Go). Again these prices are for new customers only. So, as was bound to be the case, there are a large number of iPhone 3G customers who want to upgrade to the iPhone 3GS who are being told they have to finish their existing contracts before they can get the new model. This can be done in two ways: wait until your contract ends or pay to get out of it now. This very simple issue is the basis for all of the "O2 Fail" comments over the past few days. Let's look at why it has annoyed people so much...

"But last time..."

When the iPhone 3G was announced, O2 allowed original iPhone customers to terminate their contracts early at no cost and start a brand new contract. The only cost was that of the phone and was the same cost that applied to new customers. In my own case, this was around £59 as I moved up to the £45 a month contract (18 months). The reason O2 did this (when they and other carriers have never done this for a phone before) is because they didn't subsidise the original iPhone. When you went to the Apple Store or to an O2 store, you paid the full retail price (which was around £330 for the 16GB model). Therefore, the contract you were on was purely making money based on calltime - that was a pretty sweet deal for O2 and so it was surprising they allowed people to upgrade.

In any case, most people seem to think that as it happened last time it should happen this time. They have failed to grasp the crucial word 'subsidy'. The original iPhone wasn't subsidised, the iPhone 3G was (as is the iPhone 3GS). This means that a large portion of the money you now pay to O2 from your contract goes directly to Apple for the cost of the phone. It's worth pointing out that this is the same with every other phone on the market with every other carrier (unless you're using Pay As You Go in which case you pay the full price for the phone up front). So, if O2 were to say at this point "of course you can upgrade under the same conditions as last time" they would lose a HUGE amount of money as not only would they not have made any money on airtime, they would have lost the money on the subsidised phone.

What about paying off the remaining subsidy on my phone?

There have been a fair number of people asking this question - "why can't I just buy out my subsidy and get a new contract?". The key problem here is that O2 would still not have made any money as they would have basically given you free airtime for the last 12 months. If you only pay your subsidised part, then they aren't making money. This is where the crucial word 'contract' comes into use, as you signed an agreement to pay a certain amount of money per month to cover the cost of the phone and the airtime.

But what about customer loyalty!?!

The follow-up argument to the above is "but they'd make money on the airtime in my new contract". So, you've just screwed O2 for the past 12 months by using their network without effectively paying for it and now you want a new contract where they will be able to make their money back? I don't follow that for one instant, as what happens when the next iPhone comes out in 12 months? We'll go back to square one with the argument being "they did it the last 2 times, why can't I upgrade now" which means that again O2 will go without being paid for their network usage.

Ok, how about they just add my remaining contract to my new contract?

This is a slightly harder argument as in a way it makes some sense. Rather than waiting 6 months for my contract to expire and then upgrading to a new 18 month contract, why can't O2 just let me upgrade now and add an additional 6 months to my new contract? This would work in theory but the problem is that you'll start getting people on contracts from 18-36 months and I'll guarantee that come next year they'll be the first ones to ask for another extension putting them on 24-42 month contracts and so on. This is a similar problem to people extending loan terms forever and ever in that there comes a point where they simply aren't going to pay it back. I don't know the full details but I imagine there are some laws on how many times a contract can be extended as well in order to ensure fair fiscal management but don't quote me on it!

Don't they realise we're in the middle of a recession!

This is by far the best argument I've seen used - people actually pointing out that due to the "credit crunch", O2 should be lowering prices so they can get the phone cheaper. If the recession is really that bad, don't you think it's a little stupid of you to be arguing about getting a slightly faster iPhone? Surely you should be worrying about the price of basic essentials like food, clothes, and petrol rather than the ability to get a slightly better camera in your smart phone? And how do you think mobile phone carriers are doing in the economic crisis? They need to make money as well!

The Facebook Group

One of my other favourite things during this whole saga has been the Facebook group I DONT WANT TO PAY TO UPGRADE MY IPHONE! - I don't think I even need to provide an argument against this.

My Point

I've spoken about the reasons why these arguments are flawed but my real point is that people don't "need" the iPhone 3GS so I don't understand why so many people are desperate to upgrade. I'm an iPhone developer and therefore want the new hardware so that I can write better apps that utilize its hardware, but to everybody else there is very little gain. Sure you get a slightly better camera, the ability to film video, and a digital compass (as well as a processor and RAM upgrade) but you get far more from the 3.0 upgrade than you do from the hardware upgrade.

I suppose my real point is that there are things called "contracts" that people have entered into - they are now throwing their toys out of the pram because they can't get the latest shiny object from Apple without paying for it but unfortunately that's just how it is and O2 are not going to change their minds. Why not? Because if they did they would lose huge amounts of money. At this point people decry the greedy networks and the fact that they are going to take their business elsewhere. The crucial point is that O2 is the only network that can cope with the iPhone properly and they are the only supplier so you either stay with them or you get a Palm Pre, a Google Android, or one of the new Windows Mobile phones from another supplier. But, I can guarantee that in 12 months when a new model of those phones comes out, the networks won't let you upgrade early for free so you'll be back where you started.... just with a rubbish phone instead!

The key point is that the iPhone 3GS has not been designed for existing iPhone 3G customers - it has been designed specifically to address the concerns of people who were deciding whether to get one of the other smartphones I mentioned or an iPhone. They have added MMS, Video, and a better camera - the three major flaws with the iPhone that any undecided customer would count as negatives. If you're an iPhone 3G user at present, I highly doubt that you are going to stop being an iPhone user over this issue as you have already been sold on the idea of the phone and you know that no other phone currently suits your needs.

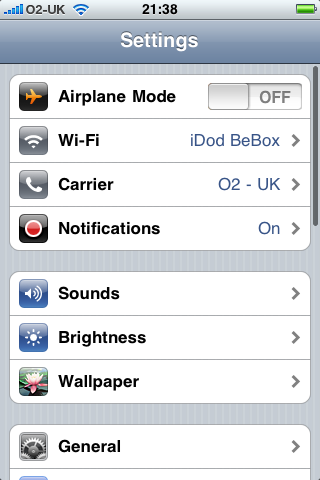

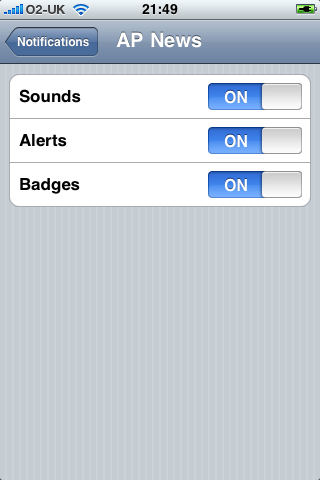

Besides, as a network O2 has proved itself to be far better than many other iPhone carriers (*cough* AT&T *cough*). They are supporting MMS on launch day (including video for those with the 3GS), the push notification system has worked flawlessly so far (I've been beta testing it), and they have internet tethering working as well (which also works very well - I'll be coming back to the price of this later).

What can I do?

So if you've read this and been swayed over to rational thinking rather than getting involved in the anti-O2 hype, what are your options for upgrading to the iPhone 3GS?

Wait. I know it's difficult but you don't need the iPhone 3GS. If you had a great need for video then you would have bought a different phone in the first place. If you bought your iPhone 3G on launch day, you should have 6 months of your contract left and this may be reduced to 0 months, 3 months, or 5 months depending on how much you've spent recently - take a look at the O2 Priority List for details.

Buy a Pay As You Go iPhone 3GS and sell your old iPhone 3G on eBay (or to a friend, etc). You get the full functionality, get to keep your number, and it'll all work on launch day just by putting your existing SIM card into the new phone. Visual Voicemail, etc, will work as you'll still be on a contract plan (the hardware between the contract and PAYG phones is the same). Plus you might even make money this way as iPhone 3Gs are selling for a high price - best to get on there quickly though!

Pay off your existing contract. You could pay off your existing contract and take out a new one - the price is generally the number of months remaining times your monthly rate so in my case it would be £270 (£45 x 6). This is the easiest way but also the most expensive.

Take out a second contract. This is what I'm going to be doing next Friday. Rather than paying off my contract in one lump sum, I'll keep that contract and my iPhone 3G (I use it for app testing) but I'll just get a new contract with the iPhone 3GS. This means I'll be paying for 2 contracts for around 6 months but that spreads the cost a bit rather than having one big hit all at once. Also, you can downgrade your iPhone contract one level after 9 months so I'll be able to move my iPhone 3G down to the £35 plan this month (and the £30 the month after) which will save some money - it also means I have a spare, live phone in case I need it for anything. This is again an expensive method, and it may be subject to a second credit check to make sure you can afford two contracts at once.
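For anyone who wants to run the numbers on those last two options, here is a rough sketch of the arithmetic for the old contract only. The £45 rate, the 6 months remaining, and the £35 and £30 plans are my figures from above. The month-by-month schedule is an assumption (one month at £35, then £30 for the rest), and the function names are made up for illustration. The new iPhone 3GS contract is left out, as you pay for that either way.

```python
def buyout_cost(monthly_rate, months_remaining):
    """Lump sum to end a contract early: months remaining times the monthly rate."""
    return monthly_rate * months_remaining


def run_out_cost(monthly_rates):
    """Total paid if the old contract simply runs out, one rate per remaining month."""
    return sum(monthly_rates)


# Option 3: pay off the old £45 contract with 6 months left.
lump_sum = buyout_cost(45, 6)

# Option 4: keep the old contract running alongside the new one.
# ASSUMPTION: the downgrade to £35 applies this month and £30 applies to
# each of the five months after that. Your own schedule may differ.
assumed_schedule = [35, 30, 30, 30, 30, 30]
spread_total = run_out_cost(assumed_schedule)

print(f"Pay off now: £{lump_sum}")
print(f"Let it run out (assumed downgrades): £{spread_total}")
print(f"Difference: £{lump_sum - spread_total}")
```

Under those assumptions the lump sum is £270 against £185 spread over six months, so letting the old contract run out costs less on that side, as well as avoiding one big hit. Swap in your own rates and months remaining to see how it works out for you.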

What should O2 do?

To my mind, this whole saga could have been avoided if O2 still offered 12 month contracts. Of course, the main problem is that phones are getting more and more expensive and operators want to keep overall contract prices as low as possible so they look competitive. I believe it was 3 mobile who were the first to bring in 18 month contracts as they could do so incredibly cheaply - they got a huge market share simply because people didn't realise what they were signing up for.

So, on the one hand you could have 12 month contracts but charge people a large amount a month so you cover both subsidy and airtime, or you can have 18-24 month contracts which are cheaper but then the customer has to pay out if they want to upgrade to the latest and greatest phone. Both have their issues and overall the cost is probably the same; the real difference is when you pay it. Personally, I would like to see mobile operators offer all three services so people can choose straight up if they want 12 months (high price, low lock-in), 18 months (medium price, medium lock-in), or 24 months (low price, high lock-in). That would avert this whole discussion on upgrading to new phone models!

Conclusion

So there you have it - O2 haven't done anything wrong, they are simply doing the same as every other provider has done since they were created. We can shout all we like but they won't back down on this issue as it doesn't make any financial sense for them to do so. If you are desperate for the iPhone 3GS, then you'll just have to pay for it or wait it out a bit longer.

Now, if I've convinced you to stop petitioning O2 about iPhone 3GS upgrades, perhaps I can convince you instead to spend your time petitioning them about their ridiculous Internet Tethering charges as that is a fight I believe can be won - £15 for 3GB of transfer is far, far too much and I can't see any justification for it! So, give up the 3GS battle and instead shout about the tethering charges :)
