Yesterday was the start of WWDC 2018 and one new feature in iOS 12 caught my attention amongst the many that were demonstrated:

The demo was part of the new Shortcuts system and showed that apps could reveal some functionality to Siri, in this case the Tile app being able to search for your keys. Once the keynote was over and the documentation went live, I had a dig through and was intrigued to find a new “custom intent” within SiriKit exposed as INObject. This is paired with a full demo app in the form of Soup Chef that shows how you can create these custom intents and use them as shortcuts for Siri. The most interesting thing about this is the following concept from the Soup Chef overview:

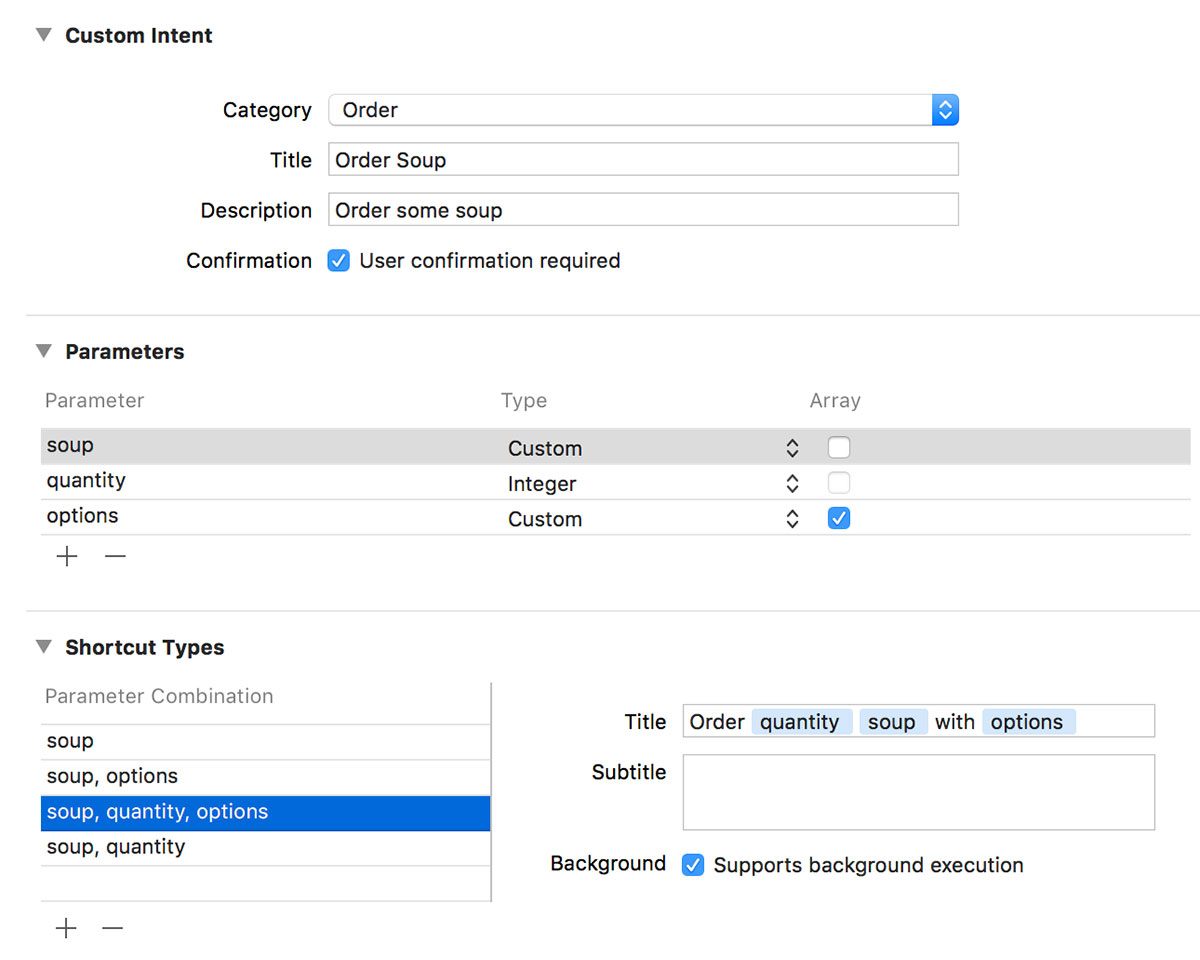

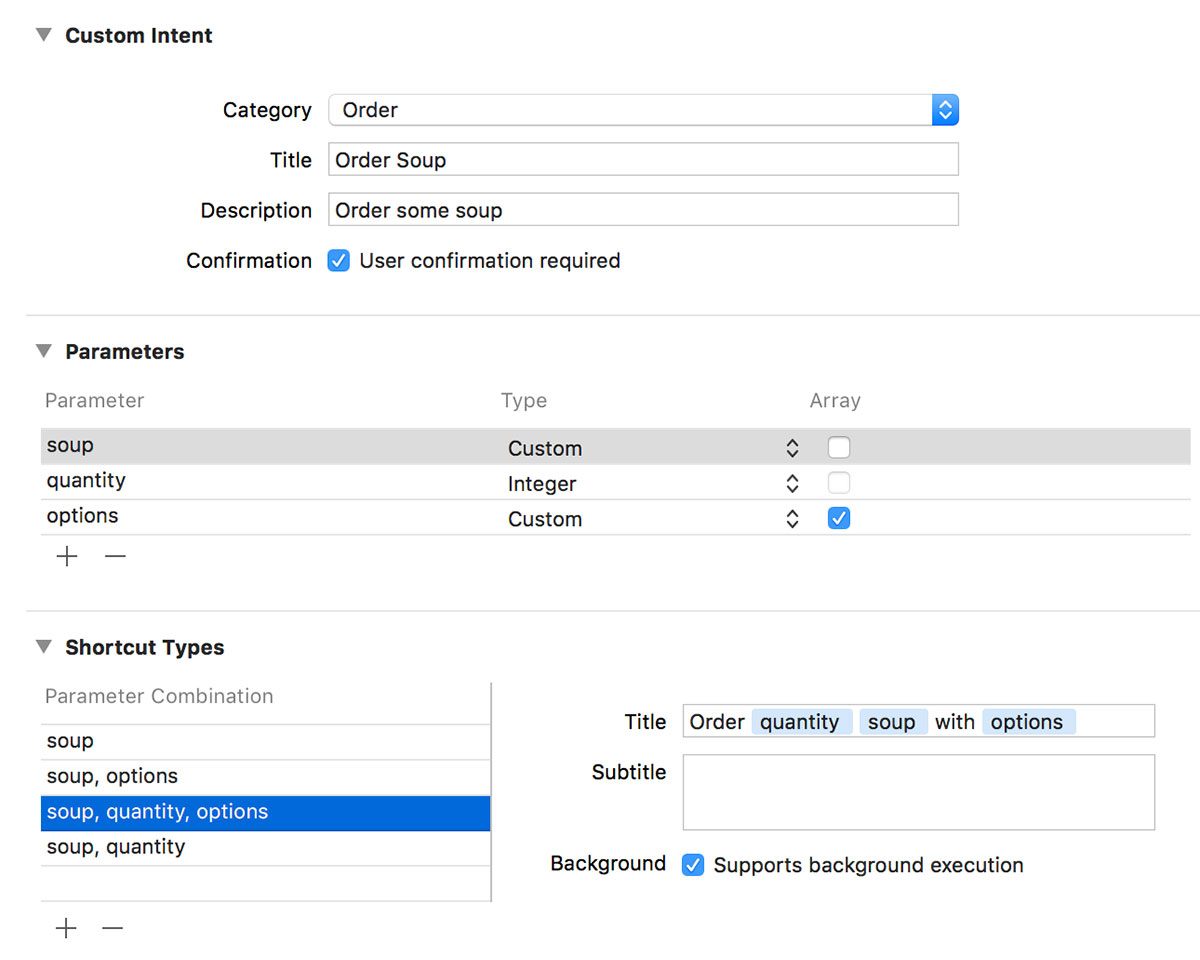

These types define the schema that Siri uses to identify requests the user makes; for example, “Order tomato soup with cheese.” The parameter combination for this example is: soup and options.

When I dug into the code, I found this new Intents.intentdefinition file with which you can create custom intents complete with parameter binding.

This sure looks like the much-anticipated ability to write your own Siri code!

What this isn’t

It turns out that isn’t the case. The new custom intents are for “Siri the all-seeing widget assistant” not for “Siri the thing you control with your voice”. These custom intents are designed to be created for very specific use cases and then exposed as shortcuts so that you can access them quickly from your lock screen, add them to a workflow, or activate them with a custom voice command that the user creates. Despite the schema being present and the documentation alluding to voice control, you cannot create your own custom commands such as “Order tomato soup with cheese”.

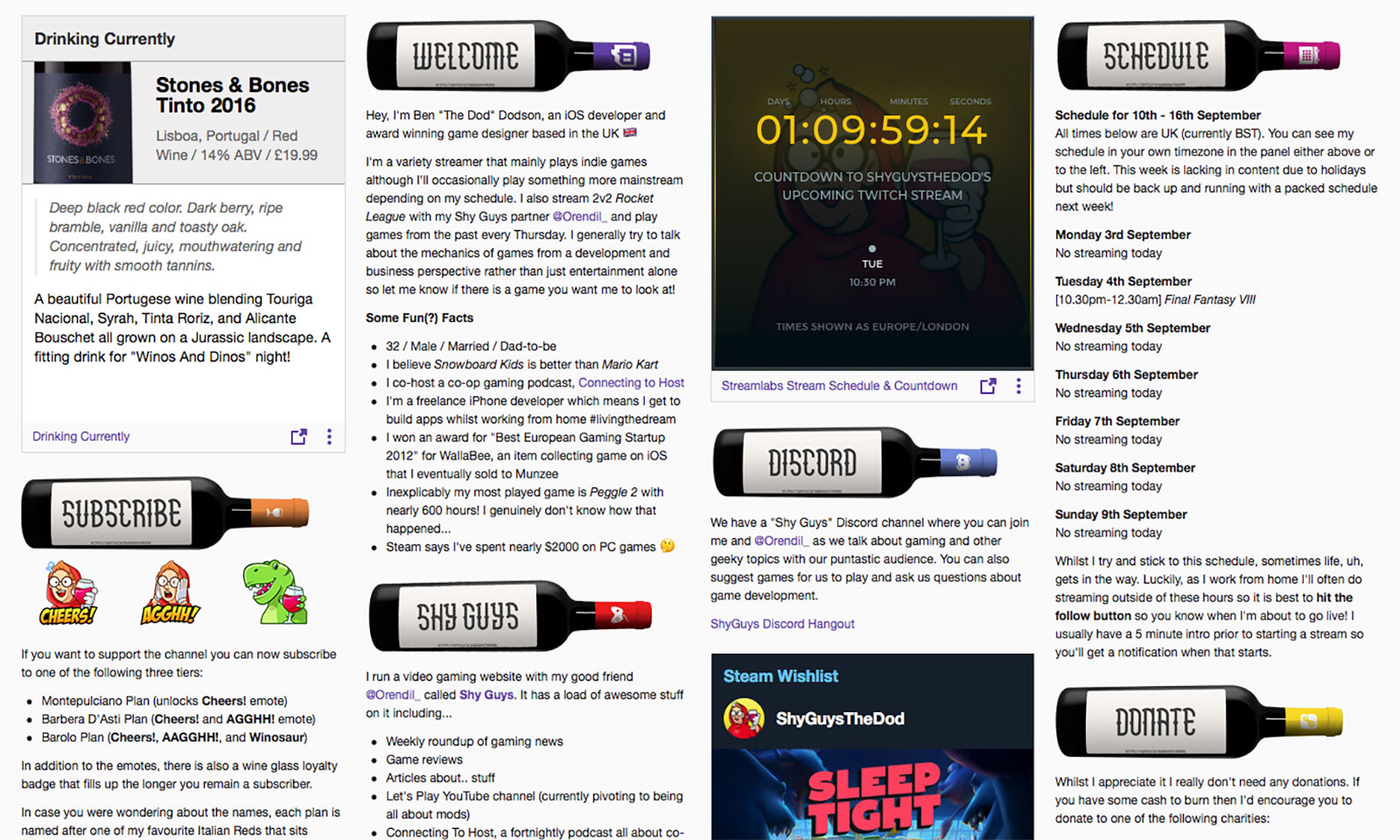

By way of an example, I have my own app that I use to update my gaming time on my ShyGuys gaming website and I was hoping to be able to use this system to say “Hey Siri, add 0.8 hours of gaming time to Skyrim on the Switch”. In parameter-based terms this would be “add [hours:decimal] of gaming time to [title:string] on the [console:enum]”. Unfortunately this is not yet possible, although the system shows promise for the future.
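To make that parameter breakdown concrete, here is a rough sketch in plain Swift of the three parameters and a naive parser for the sentence. None of this is SiriKit API: `Console`, `AddGamingTimeRequest`, and `parseAddGamingTime` are hypothetical names, the console list is arbitrary, and the regular expression only handles this one phrasing.

```swift
import Foundation

/// Hypothetical enum standing in for the [console:enum] parameter.
enum Console: String {
    case nintendoSwitch = "switch"
    case playStation4 = "ps4"
    case xboxOne = "xbox one"
}

/// Hypothetical value type holding the three parameters of the request.
struct AddGamingTimeRequest: Equatable {
    let hours: Double      // [hours:decimal]
    let title: String      // [title:string]
    let console: Console   // [console:enum]
}

/// Naive parser for "add <n> hours of gaming time to <title> on the <console>".
/// Returns nil when the sentence does not fit that exact shape.
func parseAddGamingTime(_ utterance: String) -> AddGamingTimeRequest? {
    let pattern = "^add ([0-9]+(?:\\.[0-9]+)?) hours? of gaming time to (.+) on the (.+)$"
    guard let regex = try? NSRegularExpression(pattern: pattern, options: [.caseInsensitive]) else {
        return nil
    }
    let fullRange = NSRange(utterance.startIndex..., in: utterance)
    guard let match = regex.firstMatch(in: utterance, options: [], range: fullRange),
          let hoursRange = Range(match.range(at: 1), in: utterance),
          let titleRange = Range(match.range(at: 2), in: utterance),
          let consoleRange = Range(match.range(at: 3), in: utterance),
          let hours = Double(String(utterance[hoursRange])),
          let console = Console(rawValue: utterance[consoleRange].lowercased())
    else {
        return nil
    }
    return AddGamingTimeRequest(hours: hours,
                                title: String(utterance[titleRange]),
                                console: console)
}
```

Passing in “add 0.8 hours of gaming time to Skyrim on the Switch” gives back 0.8 hours, the title “Skyrim”, and `.nintendoSwitch`; anything that doesn't fit the pattern returns `nil`.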

Before I go into how this system works and the intended use case, there is one extra thing in SiriKit that will please many developers: the Media Intent Domain, which effectively allows you to use Siri to control media apps such as Spotify, Audible, or Overcast once the developers add the necessary updates.

Custom Intents and Shortcuts

If you are unable to write custom Siri scripts, what then is the point of the new custom intent? It is designed to give you a quick shortcut to commonly used tasks.

In many ways, the Tile app is the perfect demo as it really only does one thing, which is to find a specific object. The developers of Tile could create a custom intent of the sort “Find [tile:custom]” and, when the app first launches on iOS 12, donate an INIntent for every Tile that you own. This basically registers the shortcut with the system, so you are telling Siri that there is a “Find Keys” intent, a “Find Remote” intent, a “Find Dog” intent, etc. These intents are exposed to the user as Shortcuts both within the Settings app and in the new Shortcuts app.

Every time you use the Tile app to find something, the specific intent for that device can be re-donated to the system, which helps Siri learn and enables it to prompt you when you may need to do this. For example, if you open your Tile app every morning at 8am and tap on your “Keys” Tile to find it, then that “Find Keys” intent is donated to the system, helping Siri realise it should probably show you that intent just before 8am.

How does it show an intent? By displaying it as a Shortcut on your lock screen, notification centre, Apple Watch, or within the Shortcuts app, where it can then be paired with other Shortcuts from other apps (e.g. you could have an “I’m running late” workflow which sends an iMessage to your boss, activates your find keys intent, loads up your route to work in Maps, and opens the garage ready for you to jump into your car).
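The donation step described above might look roughly like the sketch below. `INInteraction` and its `donate(completion:)` method are real SiriKit API; the `Tile` struct and its `donationIdentifier` are hypothetical, and the intent passed in is assumed to be an instance of whatever class Xcode generates from the app's Intents.intentdefinition file. The SiriKit part is wrapped in `#if os(iOS)` so the rest still compiles elsewhere.

```swift
import Foundation

/// Hypothetical model of one tracked item; not part of the Tile app or SiriKit.
struct Tile {
    let id: String
    let name: String

    /// Stable identifier attached to each donation, so that donations for
    /// this Tile can be found and deleted later if the Tile is removed.
    var donationIdentifier: String {
        return "find-tile.\(id)"
    }
}

#if os(iOS)
import Intents

/// Donates a "find this Tile" intent so the system can learn when it is used.
/// `intent` is assumed to be the app's generated custom intent, already
/// populated with the parameters for `tile`.
@available(iOS 10.0, *)
func donateFindIntent(_ intent: INIntent, for tile: Tile) {
    let interaction = INInteraction(intent: intent, response: nil)
    interaction.identifier = tile.donationIdentifier
    interaction.donate { error in
        if let error = error {
            print("Donation for \(tile.name) failed: \(error.localizedDescription)")
        }
    }
}
#endif
```

Calling `donateFindIntent` once per Tile at first launch, and again each time the user finds that Tile, would match the two donation points described above.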

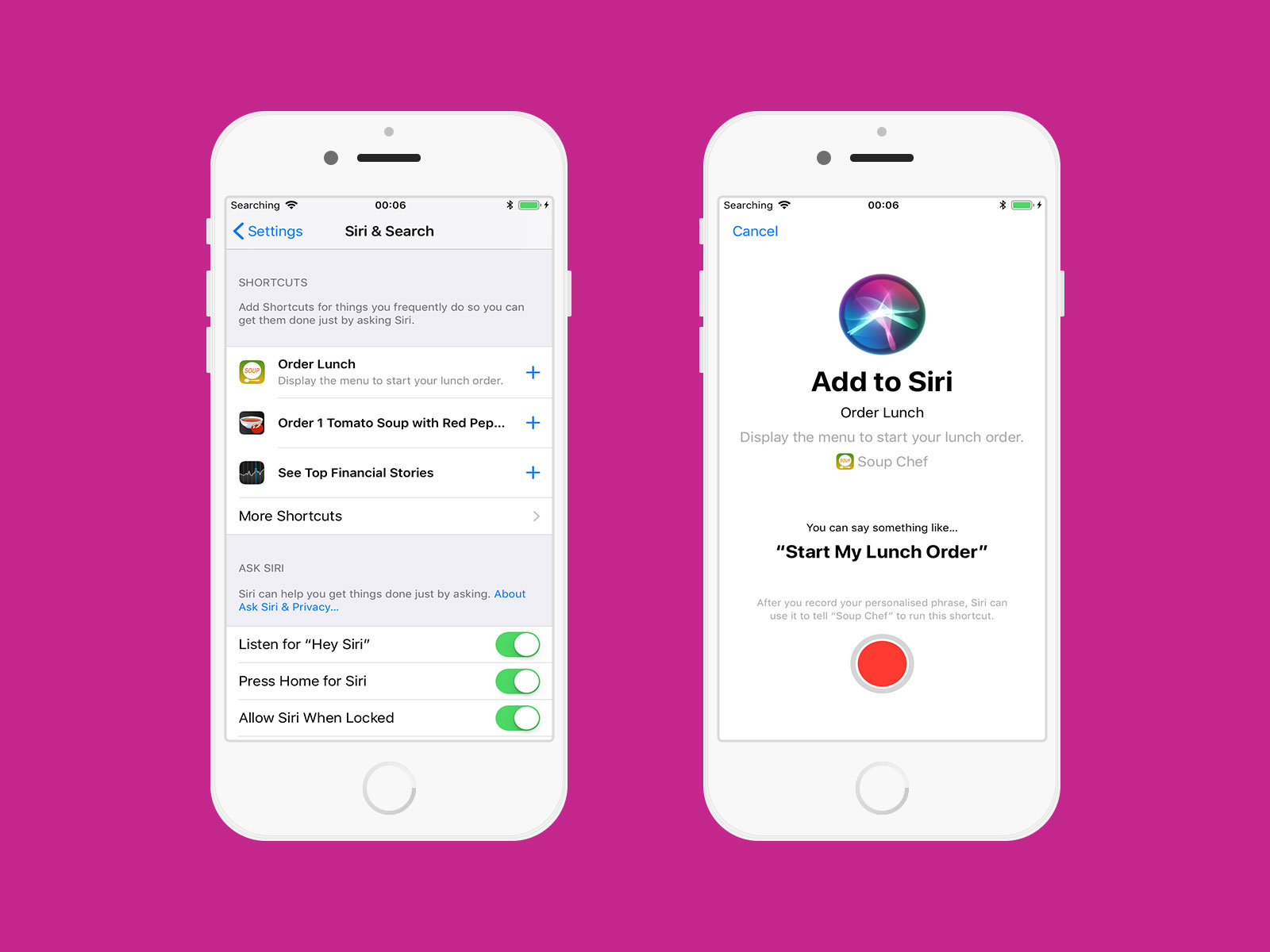

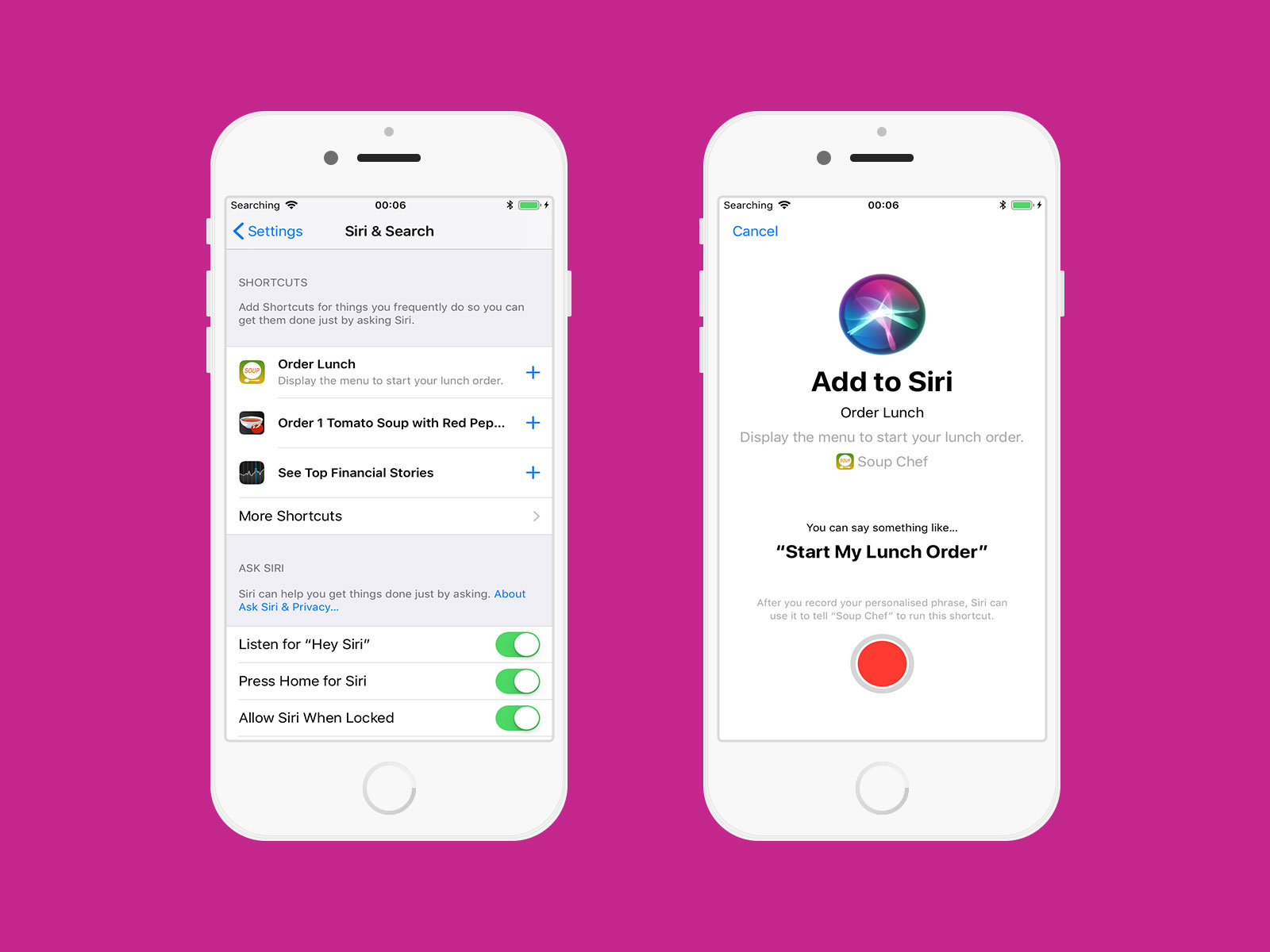

The piece that makes this slightly more confusing is that you can add a custom Siri voice command to a Shortcut. When Craig demonstrated saying “Hey Siri, I lost my keys”, that is really just a voice command on the “Find Keys” custom intent and is highly specific to that particular Tile; you’d have to record a new one if you wanted to find your TV Remote Tile. These Shortcut Phrases can be created either from within the Settings app or an app can present a view controller (complete with a suggested command text) that lets the user record their custom snippet.
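For the second route, where the app presents the recording screen itself, a sketch could look like this. `INShortcut`, `INUIAddVoiceShortcutViewController`, and `suggestedInvocationPhrase` come from the iOS 12 Intents and IntentsUI frameworks; `suggestedPhrase(forItemNamed:)` and `presentAddToSiri` are hypothetical helpers, and the presenting view controller is assumed to implement the delegate callbacks that dismiss the screen.

```swift
import Foundation

/// Hypothetical helper: builds the command text suggested to the user.
func suggestedPhrase(forItemNamed name: String) -> String {
    return "I lost my \(name.lowercased())"
}

#if os(iOS)
import UIKit
import Intents
import IntentsUI

/// Presents the system screen on which the user records a Shortcut Phrase.
/// `intent` is assumed to be the app's generated custom intent for one item.
@available(iOS 12.0, *)
func presentAddToSiri(
    for intent: INIntent,
    itemName: String,
    from presenter: UIViewController & INUIAddVoiceShortcutViewControllerDelegate
) {
    // Shown as the suggested command text; the user can record something else.
    intent.suggestedInvocationPhrase = suggestedPhrase(forItemNamed: itemName)

    // The initializer is failable, so bail out if no shortcut can be made.
    guard let shortcut = INShortcut(intent: intent) else { return }

    let controller = INUIAddVoiceShortcutViewController(shortcut: shortcut)
    controller.delegate = presenter
    presenter.present(controller, animated: true)
}
#endif
```

Because the phrase is tied to one intent with one set of parameters, the app would need to present this screen separately for each Tile.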

When a Shortcut is invoked (either by a Shortcut Phrase or by tapping on a Shortcut) it can either launch your app in the foreground or fire up your INExtension, which can then return a custom UI directly within Siri. Both have their uses, although again they are fairly specific.

By way of an example, let’s say I order a Chinese takeaway every Friday night via the Just Eat app. When I place my order, the app could create two custom intents:

- A generic intent for the takeaway venue:

"Order from [name:string]"

- A specific intent for my meal:

"Order [menuitems:array] from [name:string]"

The first one could launch the Just Eat app and take me directly to the menu for the takeaway I order from so I can peruse and then place my order. The second one would instead be able to place my regular order without opening the app and even provide custom UI to perform an Apple Pay transaction within Siri.

This is super powerful when combined with other Shortcuts as I could then record a Shortcut Phrase “Hey Siri, it’s Friday Friday got to get down on Friday” which would turn on my living room lights, open up the Netflix app on my Apple TV, lock the front door, and place my Chinese order.

The fact that these Shortcuts can be created silently by the app and then donated to Siri so it can then suggest them to you at certain points is also super interesting. Siri already knows to show the Just Eat app in my Siri App Suggestions on a Friday night so having it in the future automatically prompt me to place a repeat order will cut out some time. Once lots of apps add support for this it will be cool and perhaps a little scary to see what regular habits we have that we didn’t even realise.

(Update: It turns out that apps have been creating Shortcuts since as far back as iOS 8. If you make use of NSUserActivity, then these are donated automatically when calling becomeCurrent(), or you can use the donate(completion:) method of INInteraction since iOS 10 to donate any of the standard SiriKit interactions such as starting a workout, initiating a VoIP call, or booking a ride. Any app that has done this, regardless of whether it has been updated for iOS 12, will show in the Shortcuts system.)
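As a rough illustration of the NSUserActivity route, the sketch below builds an activity for reopening a takeaway's menu. The activity type string, the `venueID` key, and both function names are hypothetical. It also sets `isEligibleForPrediction`, the flag the iOS 12 SDK adds for marking an activity as something Siri may suggest.

```swift
import Foundation

/// Hypothetical reverse-DNS activity type; it would also need to be listed
/// under NSUserActivityTypes in the app's Info.plist.
let orderFromVenueActivityType = "com.example.takeaway.order-from-venue"

/// Data the app needs to reopen the right menu when the shortcut is used.
func orderActivityUserInfo(venueID: String) -> [String: String] {
    return ["venueID": venueID]
}

#if os(iOS)
import Intents

/// Builds an activity describing "Order from <venue>".
@available(iOS 12.0, *)
func makeOrderActivity(venueID: String, venueName: String) -> NSUserActivity {
    let activity = NSUserActivity(activityType: orderFromVenueActivityType)
    activity.title = "Order from \(venueName)"
    activity.userInfo = orderActivityUserInfo(venueID: venueID)
    activity.isEligibleForSearch = true
    // New in iOS 12: lets Siri suggest this activity as a shortcut.
    activity.isEligibleForPrediction = true
    return activity
}
#endif
```

Assigning the result to a view controller's `userActivity` property, or calling `becomeCurrent()` on it, is what hands it to the system.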

To be clear, this system is not yet at the same stage as Alexa or Google Home. You can’t say “Order half crispy aromatic duck and some egg fried rice from Peking House” without first having already placed that order and assigned a Shortcut Phrase to it. However, the jump to that system suddenly doesn’t seem so far. Siri is already getting all of the data it needs thanks to the intents parameter builder and I can’t shake the feeling that these custom Shortcut Phrases are just going to be used to train Siri on lots of different words over the coming year. There is going to need to be some clever work to avoid collisions but on the whole I’m excited to see where this heads next.

All of the topics above are due to be covered at WWDC today and tomorrow at the following sessions:

- Tuesday 5pm: “Introduction to Siri Shortcuts” [link]

- Wednesday 10am: “Building for Voice with Siri Shortcuts” [link]

- Wednesday 11am: “Siri Shortcuts on the Siri Watch Face” [link]

Also, don’t forget to check out the Soup Chef demo app.