Scalable bulleted lists with UILabel or UITextView

I’ve recently been implementing auto-renewable subscriptions for a client and needed to create a bulleted list of notes¹. There are numerous tutorials that show how to do this, but every one I found had a flaw of some kind, be it using fixed values for bullet widths or not taking the variable font sizes of Dynamic Type into consideration.

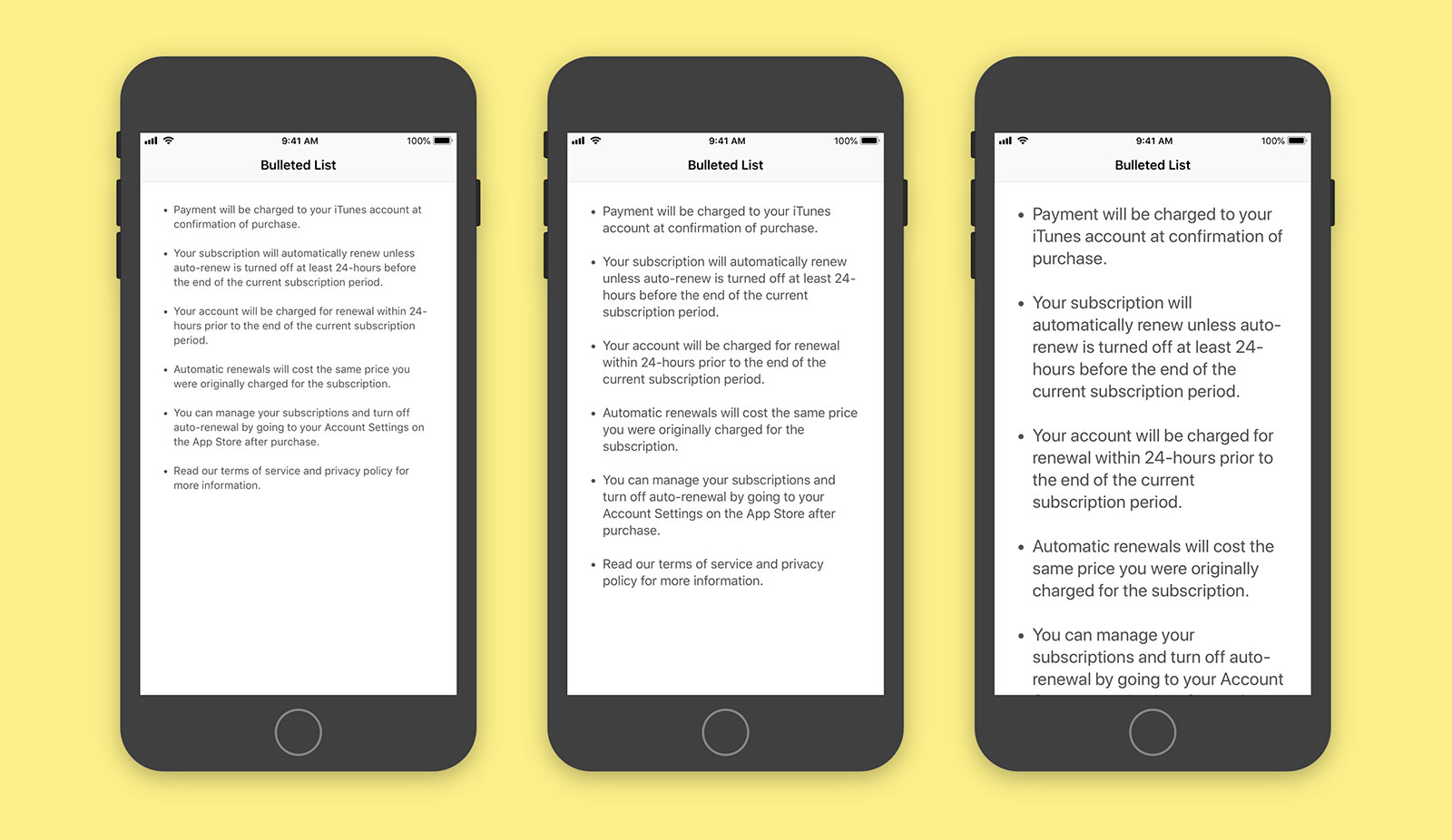

Here, then, is a quick primer on how to add correctly aligned bullets to a list, be it in a `UILabel` or a `UITextView`, and have it scale correctly depending on the user’s text size preference.

```swift
import UIKit

class ViewController: UIViewController {

    @IBOutlet weak var label: UILabel!

    override func viewDidLoad() {
        super.viewDidLoad()

        // Rebuild the list whenever the user changes their preferred text size.
        NotificationCenter.default.addObserver(self, selector: #selector(updateUI), name: UIContentSizeCategory.didChangeNotification, object: nil)

        updateUI()
    }

    @objc func updateUI() {
        // The bullet plus the spacing that follows it.
        let bullet = "•  "

        var strings = [String]()
        strings.append("Payment will be charged to your iTunes account at confirmation of purchase.")
        strings.append("Your subscription will automatically renew unless auto-renew is turned off at least 24-hours before the end of the current subscription period.")
        strings.append("Your account will be charged for renewal within 24-hours prior to the end of the current subscription period.")
        strings.append("Automatic renewals will cost the same price you were originally charged for the subscription.")
        strings.append("You can manage your subscriptions and turn off auto-renewal by going to your Account Settings on the App Store after purchase.")
        strings.append("Read our terms of service and privacy policy for more information.")

        // Prefix every item with the bullet.
        strings = strings.map { bullet + $0 }

        var attributes = [NSAttributedString.Key: Any]()
        attributes[.font] = UIFont.preferredFont(forTextStyle: .body)
        attributes[.foregroundColor] = UIColor.darkGray

        // Indent wrapped lines by the width of the bullet in the current font.
        let paragraphStyle = NSMutableParagraphStyle()
        paragraphStyle.headIndent = (bullet as NSString).size(withAttributes: attributes).width
        attributes[.paragraphStyle] = paragraphStyle

        let string = strings.joined(separator: "\n\n")

        // Let the label wrap onto as many lines as it needs.
        label.numberOfLines = 0
        label.attributedText = NSAttributedString(string: string, attributes: attributes)
    }
}
```

The first thing to determine is the bullet you want to use. I like to use a • (press Option + 8) followed by two spaces. We store this in a constant and then build an array of strings, one for each line of our list². These are then mapped to prepend our chosen bullet to each string.

```swift
let bullet = "•  "

var strings = [String]()
strings.append("First line of your list")
strings.append("Second line of your list")
strings.append("etc")

strings = strings.map { bullet + $0 }
```

Next, we create the base attributes of our label or text view, such as the font and colour. As we want the text to scale with the user’s own text size preference, we use Dynamic Type via `preferredFont(forTextStyle: .body)`, although you can of course use any font. Most of the heavy lifting is done by an `NSParagraphStyle` property called `headIndent`, which indents every line of a paragraph except the first by a fixed amount. We can work out how large this indent should be by bridging our bullet to `NSString` and passing our previously created attributes to its `size(withAttributes:)` method. This gives us the width of the bullet, plus any spacing you added after it, in the exact font and size you have chosen.

```swift
var attributes = [NSAttributedString.Key: Any]()
attributes[.font] = UIFont.preferredFont(forTextStyle: .body)
attributes[.foregroundColor] = UIColor.darkGray

let paragraphStyle = NSMutableParagraphStyle()
paragraphStyle.headIndent = (bullet as NSString).size(withAttributes: attributes).width

attributes[.paragraphStyle] = paragraphStyle
```

Finally, we join our strings with a blank line between each item (`strings.joined(separator: "\n\n")`) and create an attributed string using those attributes, including the new paragraph style.
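Because `UILabel` and `UITextView` both expose an `attributedText` property, the steps above can be folded into a single function and reused for either view. The sketch below does that; `makeBulletedList(_:bullet:font:color:)` is a hypothetical name of my choosing, not a UIKit API, and its defaults simply mirror the values used in this article.

```swift
import UIKit

/// Hypothetical helper (not a UIKit API): builds a bulleted list whose
/// wrapped lines line up with the text after the bullet.
func makeBulletedList(_ items: [String],
                      bullet: String = "•  ",
                      font: UIFont = UIFont.preferredFont(forTextStyle: .body),
                      color: UIColor = UIColor.darkGray) -> NSAttributedString {
    var attributes: [NSAttributedString.Key: Any] = [
        .font: font,
        .foregroundColor: color
    ]

    // Indent every line except the first of each paragraph by the rendered
    // width of the bullet (including its trailing spaces) in this font.
    let paragraphStyle = NSMutableParagraphStyle()
    paragraphStyle.headIndent = (bullet as NSString).size(withAttributes: attributes).width
    attributes[.paragraphStyle] = paragraphStyle

    // Each "\n" starts a new paragraph, so every item's first line begins at
    // the margin with its bullet; the second "\n" only adds a blank line.
    let text = items.map { bullet + $0 }.joined(separator: "\n\n")
    return NSAttributedString(string: text, attributes: attributes)
}
```

With `strings` holding the un-bulleted items, `updateUI` would then reduce to `label.attributedText = makeBulletedList(strings)`, or `textView.attributedText = makeBulletedList(strings)` for a text view. Calling it again after a content size change picks up the new preferred font and re-measures the bullet.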

This all works, but there are two more things you’ll need to do to support dynamic font scaling. First, make sure the ‘Automatically Adjusts Font’ checkbox is selected in Interface Builder for your label or text view³. Second, you’ll want to be notified when the content size category changes (i.e. when the user goes to the Settings app and increases or decreases the text size) by observing `UIContentSizeCategory.didChangeNotification` (spelled `.UIContentSizeCategoryDidChange` before Swift 4.2) and regenerating your label’s attributed text, which also re-measures the bullet width in the new font size. I prefer to do this in a method named `updateUI`, but your personal preference may vary.

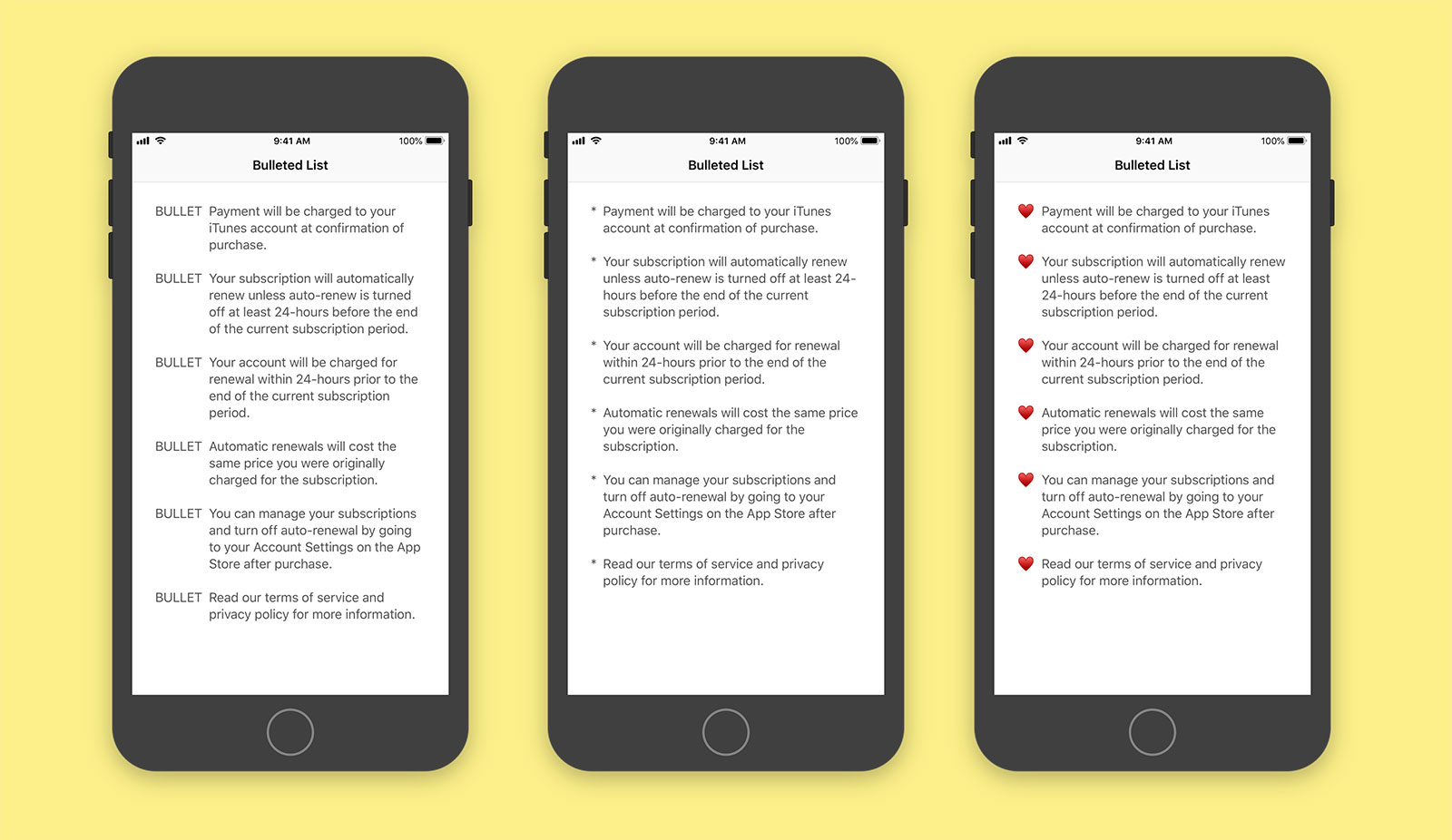
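To see why the list has to be regenerated rather than built once, the sketch below measures the same bullet in the body font at two Dynamic Type sizes. It assumes iOS 10 or later, which provides `UIFont.preferredFont(forTextStyle:compatibleWith:)` and `UITraitCollection(preferredContentSizeCategory:)`; `bulletIndent(_:for:textStyle:)` is a hypothetical helper name. A `headIndent` measured at one size and reused after the user changes their text size would leave wrapped lines misaligned.

```swift
import UIKit

/// Hypothetical helper: the head indent a bullet needs at a given
/// Dynamic Type size, measured in that size's preferred font.
func bulletIndent(_ bullet: String,
                  for category: UIContentSizeCategory,
                  textStyle: UIFont.TextStyle = .body) -> CGFloat {
    // A trait collection that describes only the content size category.
    let traits = UITraitCollection(preferredContentSizeCategory: category)
    let font = UIFont.preferredFont(forTextStyle: textStyle, compatibleWith: traits)
    return (bullet as NSString).size(withAttributes: [.font: font]).width
}

// The largest accessibility size needs a much wider indent than .small,
// so the indent must be recomputed whenever the category changes.
let smallIndent = bulletIndent("•  ", for: .small)
let largestIndent = bulletIndent("•  ", for: .accessibilityExtraExtraExtraLarge)
print(smallIndent, largestIndent)
```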

The nice thing about this setup is that it is entirely fluid, doesn’t require any third-party dependencies, and can be used with any type of bullet, be it a single character, a word, or even an emoji.
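The article’s code measures a single bullet and applies one paragraph style to the whole string, so it assumes every item uses the same bullet. If one list mixes bullets of different widths, say a •, an emoji and a word, each item needs its own indent. A minimal sketch, assuming a hypothetical `makeMixedBulletList(_:font:)` helper, gives every item its own `NSMutableParagraphStyle`:

```swift
import UIKit

/// Hypothetical helper for lists whose items use bullets of different widths.
/// Each item gets its own paragraph style, so its wrapped lines align with
/// the text after its own bullet.
func makeMixedBulletList(_ items: [(bullet: String, text: String)],
                         font: UIFont = UIFont.preferredFont(forTextStyle: .body)) -> NSAttributedString {
    let result = NSMutableAttributedString()
    for (index, item) in items.enumerated() {
        let paragraphStyle = NSMutableParagraphStyle()
        paragraphStyle.headIndent = (item.bullet as NSString).size(withAttributes: [.font: font]).width

        let attributes: [NSAttributedString.Key: Any] = [
            .font: font,
            .paragraphStyle: paragraphStyle
        ]
        // Blank line between items, but none after the last one.
        let separator = index < items.count - 1 ? "\n\n" : ""
        result.append(NSAttributedString(string: item.bullet + item.text + separator,
                                         attributes: attributes))
    }
    return result
}

let notes = makeMixedBulletList([
    (bullet: "•  ", text: "A classic bullet"),
    (bullet: "⭐️ ", text: "An emoji bullet"),
    (bullet: "Note: ", text: "A whole word used as a bullet")
])
```

Since paragraph attributes are resolved per paragraph, each item keeps the indent that matches its own bullet, and the list still rebuilds correctly from `updateUI` when the text size changes.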

I’ve uploaded a basic project to GitHub to demonstrate this code in action. Hopefully this article will serve as a reminder that you don’t need to import third-party libraries to achieve basic text formatting, and that you should always be wary of text layout code that doesn’t take font scaling into account.

1. Sourced from the excellent tutorial by David Barnard.
2. Don’t forget to use `NSLocalizedString`; I didn’t bother for the sake of brevity in this article.
3. Alternatively, you can use the `adjustsFontForContentSizeCategory` Boolean property on `UILabel` and `UITextView`.