Revival

I’m pleased to announce the release of a new client app I’ve been working on over the past few months: Revival, truly uncomplicated task planning for everyone.

I was originally contacted by Wonderboy Media to update their existing reminders app, which was looking a little dated and had some issues with broken functionality. After a detailed examination, we determined that a complete rebuild was needed in order to make a sustainable v2.0 that would serve as a solid foundation for many years to come. I worked as the sole iOS developer on the project over many months[1] as I got to grips with what was a deceptively complex project including such things as local notifications (with snoozing), timezones, locations, subtasks, priorities, lists, tags, notes, files, contacts, subscriptions, integration with the iOS Reminders app, and Siri support! In addition, I completely redesigned the app, giving it a far more modern look and feel complete with fluid gestures, sounds, and haptics. I even redesigned the app icon![2] It should also go without saying that the app was built entirely in Swift 5 using Auto Layout to ensure a flexible design from the 4.7” iPhone SE right up to the 12.9” iPad Pro, including the various adaptations that can occur when multitasking on iPadOS.

One of the most complicated pieces was syncing, not only between a user’s own devices but also when sharing and sending tasks between users. I’m particularly proud of the seamless and accountless syncing system within the app, which uses your CloudKit identifier along with Firebase to provide real-time syncing between devices – you can literally complete a task on your iPhone and see it update in milliseconds on your iPad! In addition, everything is stored locally on device using Realm in order to keep things incredibly fast and to provide advanced searching capabilities. When it came to sharing a task with another user, I removed the old system, which required creating user accounts and sending codes back and forth, and instead created a system which provides a simple URL; when opened, the app pulls all of the information it needs quickly and securely to either create a copy of the shared task or grant access to it.

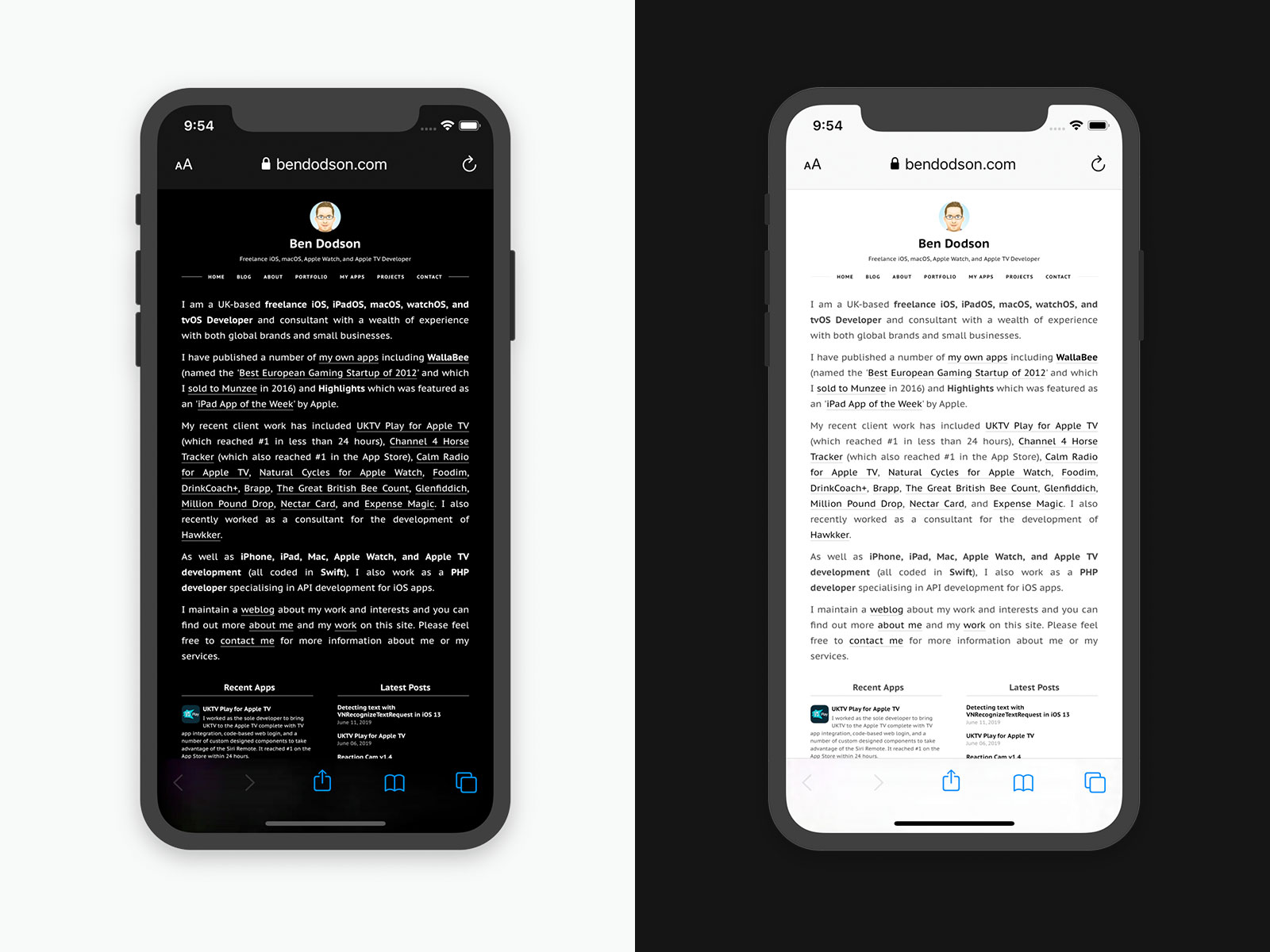
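To give a feel for the URL-based sharing idea, here is a minimal sketch of how an app might decode an incoming share link. This is not Revival’s actual code: the link format, the `ShareMode` and `ShareLink` types, and the `parseShareLink` function are all hypothetical, and I’m assuming for illustration that the link carries a task identifier plus a flag for “copy” versus “access”.

```swift
import Foundation

// Hypothetical link format (an assumption, not Revival's real scheme):
//   https://example.com/share?task=<id>&mode=copy|access
enum ShareMode: String {
    case copy     // recipient gets an independent copy of the task
    case access   // recipient is granted access to the shared task
}

struct ShareLink: Equatable {
    let taskID: String
    let mode: ShareMode
}

/// Returns a `ShareLink` if the URL matches the hypothetical format, otherwise nil.
func parseShareLink(_ url: URL) -> ShareLink? {
    guard let components = URLComponents(url: url, resolvingAgainstBaseURL: false),
          components.path == "/share",
          let items = components.queryItems,
          let taskID = items.first(where: { $0.name == "task" })?.value,
          !taskID.isEmpty,
          let rawMode = items.first(where: { $0.name == "mode" })?.value,
          let mode = ShareMode(rawValue: rawMode)
    else { return nil }
    return ShareLink(taskID: taskID, mode: mode)
}

// Usage: the app would call this when it is opened from a link.
if let url = URL(string: "https://example.com/share?task=abc123&mode=copy"),
   let link = parseShareLink(url) {
    print(link.taskID, link.mode)
}
```

Rejecting anything that doesn’t match exactly (wrong path, missing identifier, unknown mode) means a malformed link simply does nothing; in a real app, the identifier would then be used to fetch the task from the server.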

Another complex piece revolved around theming: users can change the overall colour of the app and also switch between a light and dark mode. This required a custom solution that could change all of the UI on a whim, whilst also recognising that iOS 13 was around the corner and would likely include a dark mode. The work I put in paid off: when iOS 13 launched, I was able to easily add an “automatic” mode that changes the theme between light and dark based on the iOS preferences[3].

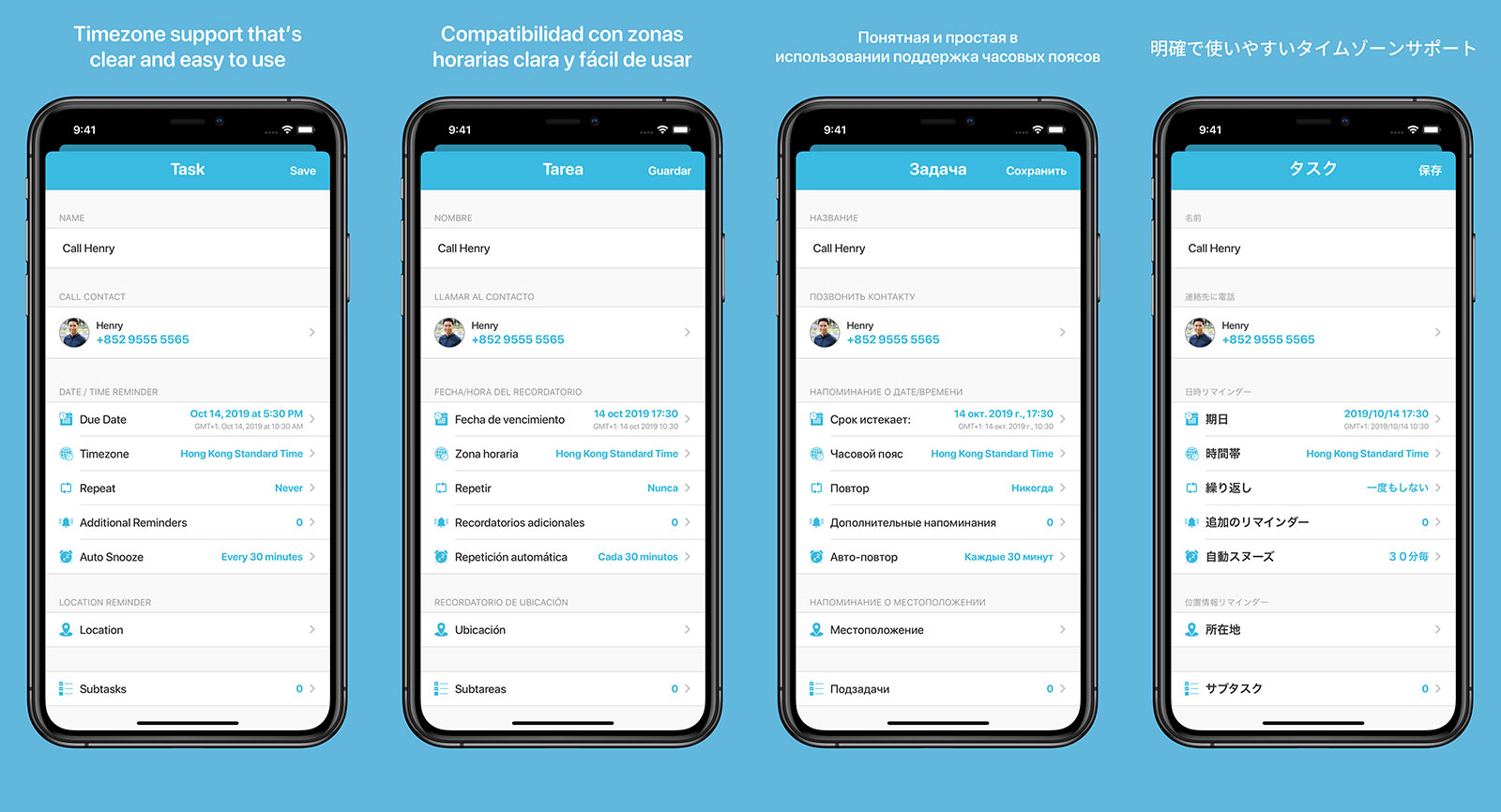
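One common way to build this kind of theming is an observer pattern: a single object owns the current theme and every view registers a callback that re-applies its colours whenever the theme changes. The sketch below illustrates that general technique only; it is not the code used in Revival, and every name in it (`ThemeManager`, `ThemeMode`, `Appearance`, `accentHex`) is hypothetical. It uses plain Foundation so it can run outside of an app.

```swift
import Foundation

// All names here are hypothetical; this illustrates the pattern, not Revival's code.
enum Appearance { case light, dark }

enum ThemeMode {
    case light, dark
    case automatic   // follow whatever the system is currently using

    func resolved(system: Appearance) -> Appearance {
        switch self {
        case .light: return .light
        case .dark: return .dark
        case .automatic: return system
        }
    }
}

struct Theme: Equatable {
    let appearance: Appearance
    let accentHex: String   // the user's chosen overall colour
}

final class ThemeManager {
    typealias Observer = (Theme) -> Void

    private var observers: [UUID: Observer] = [:]
    private(set) var theme: Theme

    // Changing any input recomputes the theme and notifies observers if it differs.
    var mode: ThemeMode { didSet { refresh() } }
    var accentHex: String { didSet { refresh() } }
    var systemAppearance: Appearance { didSet { refresh() } }

    init(mode: ThemeMode, accentHex: String, systemAppearance: Appearance) {
        self.mode = mode
        self.accentHex = accentHex
        self.systemAppearance = systemAppearance
        self.theme = Theme(appearance: mode.resolved(system: systemAppearance),
                           accentHex: accentHex)
    }

    /// Registers an observer and immediately calls it with the current theme.
    @discardableResult
    func observe(_ observer: @escaping Observer) -> UUID {
        let token = UUID()
        observers[token] = observer
        observer(theme)
        return token
    }

    func removeObserver(_ token: UUID) {
        observers[token] = nil
    }

    private func refresh() {
        let updated = Theme(appearance: mode.resolved(system: systemAppearance),
                            accentHex: accentHex)
        guard updated != theme else { return }   // nothing visible changed
        theme = updated
        observers.values.forEach { $0(updated) }
    }
}

// Usage: a "view" records every appearance it is asked to apply.
func demo() -> [Appearance] {
    let manager = ThemeManager(mode: .automatic, accentHex: "#FF9500", systemAppearance: .light)
    var applied: [Appearance] = []
    manager.observe { applied.append($0.appearance) }
    manager.systemAppearance = .dark   // e.g. the system switches at sunset mid-session
    manager.mode = .dark               // resolved theme is already dark, so no callback
    return applied
}
```

Because the system appearance is just another input, an “automatic” mode needs no special handling in the views: they already react to any theme change, whether it comes from the settings panel or from the system.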

Whilst there are many aspects to this project that provided additional complications[4], one of the most satisfying to work on was the localisation of the app into eight additional languages. We worked with Babble-on to get the required translation files, which were easily loaded into the app, but I also wanted to improve the experience on the App Store. The previous versions of the app had custom artwork for each language with translated text above, but showed the same English interface. I wanted to automate this and improve it, especially as Apple required screenshots for four devices across nine languages. The solution was to use the XCTest framework (along with a custom data loader) to open the app at various pages and take snapshots; these were then used by the deliver and snapshot parts of Fastlane to wrap them in a device frame and add the localised text. The result is 180 screenshots, each with localised text, the correct frame for the targeted device, and a localised interface.

I really enjoyed working with Wonderboy Media and having the opportunity to work on such a complex project. I’m incredibly pleased with both the design and development work that I’ve put into it and I’m excited to see how it grows in future.

You can download Revival on the App Store for free and subscriptions are available to gain access to advanced features.

1. Another developer has now been added to the team to help with a number of exciting new updates which will be rolling out over the next few months.

2. As a reminders app it’s almost law that you have to use a tick but I wanted to have a homage to the skeuomorphic clock-based interface of the old app as well. My solution was to make a clock face with the tick rendered from the hands.

3. This is far more complex than it seems: if you choose to have iOS change between light and dark automatically based on the time of day, then the theme can change whilst you are in the middle of using the app. Every view needed to be able to handle the possibility that the underlying theme could change at any second, rather than only when changed from the settings panel.

4. Auto-renewable subscriptions and supporting promoted in-app purchases, getting around the 64-notification limit of `UNUserNotificationCenter` when you have 300 notifications to load in, how to efficiently deal with syncing contact information when it could be different on each device, etc. Don’t even talk to me about recreating the entirety of the custom repeat options from the iOS Reminders app!